Audit-first AI for claims document workflows.

I build document intelligence that turns claim PDFs into structured outputs—with provenance, quality gates, and a fast review console for exceptions.

- Cut review time by routing only risky cases to humans

- Make outputs defensible with evidence links to source pages

- Ship pragmatically — prototype → measurable runs → production

Prefer async? Email me

- 10+ years in insurance tech

- 3 live production systems

- 100% evidence-linked outputs

Featured Work

Systems I ship when reliability matters more than demos.

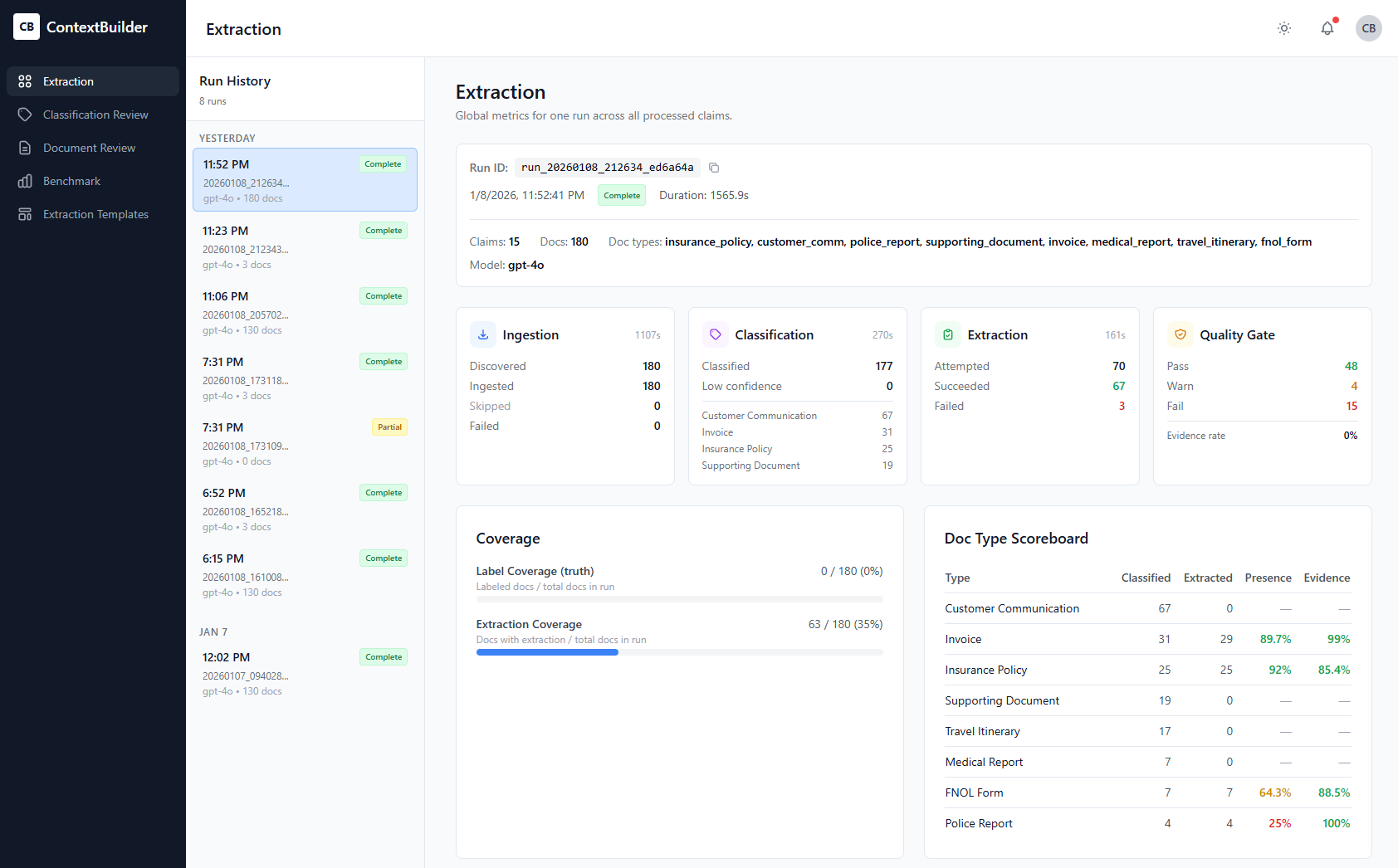

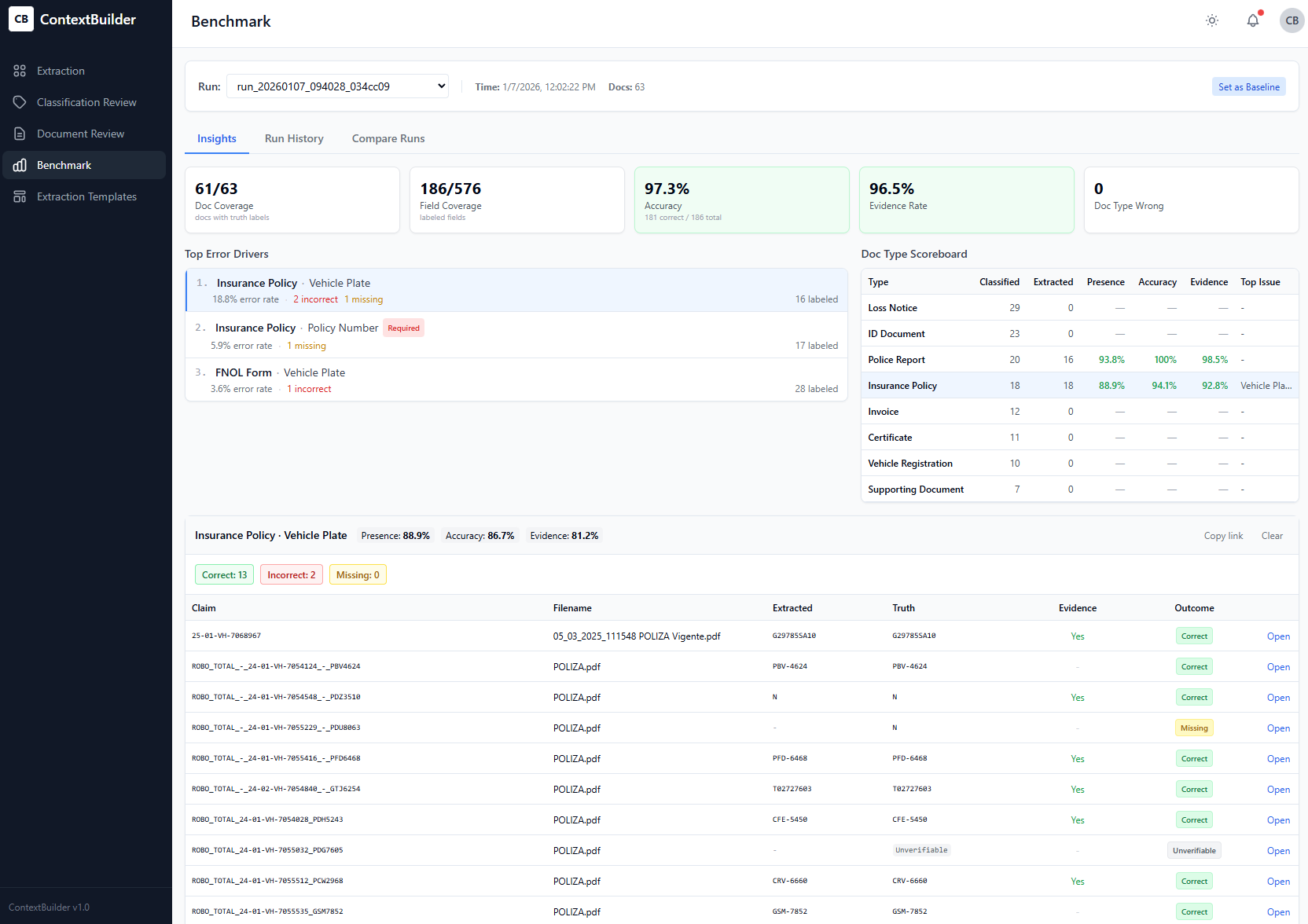

ClaimEval

QA copilot for claims review

Outcome: Error drivers + benchmark drilldown

Agentic Context Builder

Reliable LLM context pipelines

Outcome: Evidence-first context packs

Prompt Management Playbook

Versioned, testable prompts

Outcome: Prompt versioning + governance pack

See it running

Try the live demos directly. No signup required.

How it works (in one view)

Most AI demos stop at "it extracted something." I optimize for what matters in claims ops: traceable fields, quality gates, and a review console that makes exceptions fast.

1. Ingest messy document packs (PDFs, images, emails)

2. Extract structured fields with source citations

3. Validate against rules and flag exceptions

4. Review edge cases in a human console
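To make the flow above concrete, here is a minimal Python sketch of the validate-and-route part. It is an illustration under stated assumptions, not the production implementation: ingestion and extraction are stubbed with hand-written sample fields, and every name in it (`Evidence`, `ExtractedField`, `require_evidence`, `min_confidence`, `route`, the 0.8 threshold) is hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Evidence:
    page: int      # 1-based page in the source document
    snippet: str   # text span the value was read from


@dataclass
class ExtractedField:
    name: str
    value: str
    confidence: float              # hypothetical extractor score in [0, 1]
    evidence: Optional[Evidence]   # None means the value has no citation


# A rule returns a failure reason when a field fails, else None.
Rule = Callable[[ExtractedField], Optional[str]]


def require_evidence(f: ExtractedField) -> Optional[str]:
    return None if f.evidence is not None else "missing source citation"


def min_confidence(threshold: float) -> Rule:
    def rule(f: ExtractedField) -> Optional[str]:
        if f.confidence >= threshold:
            return None
        return f"confidence below {threshold}"
    return rule


def validate(fields, rules):
    """Return {field name: [failure reasons]} for fields that fail any rule."""
    exceptions = {}
    for f in fields:
        reasons = [msg for r in rules if (msg := r(f)) is not None]
        if reasons:
            exceptions[f.name] = reasons
    return exceptions


def route(fields, rules):
    """Send the document to a human only when at least one field fails."""
    exceptions = validate(fields, rules)
    decision = "human_review" if exceptions else "auto_accept"
    return decision, exceptions


# Made-up sample data standing in for the ingest and extract steps.
fields = [
    ExtractedField("claim_number", "CLM-001", 0.98, Evidence(1, "Claim No: CLM-001")),
    ExtractedField("loss_date", "2024-03-02", 0.61, Evidence(2, "Date of loss: 2024-03-02")),
    ExtractedField("payee", "J. Doe", 0.95, None),
]
decision, exceptions = route(fields, [require_evidence, min_confidence(0.8)])
print(decision, exceptions)
```

In this sketch the reviewer sees only the two flagged fields, each with the reason it was flagged, while the clean field passes through with its page citation attached.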

Writing

Short notes on building audit-friendly AI in regulated workflows: prompts, provenance, evaluation, and pragmatic delivery.

Audit-first AI: what it means in practice

Most AI demos ignore the question regulators and QA teams actually ask: 'How do I know this is right?' Here's how to build systems that answer it.

Jan 10, 2025

Evidence-first extraction: the missing layer in LLM apps

LLMs can extract data from documents. But without evidence linking, you've just built a fancy black box. Here's the layer most teams skip.

Jan 8, 2025

Benchmarks that drive action: error drivers, not vibes

Accuracy percentages are useless without knowing what's failing and why. Here's how to build benchmarks that actually improve your system.

Jan 5, 2025